Chronic myeloid leukemia (CML) is a progressive and potentially fatal myeloproliferative disorder with over 5,000 new cases in the US each year, accounting for approximately 20 % of all leukemias diagnosed in adults.1 The natural history of CML consists of three distinct stages: chronic, accelerated, and blast phase.2 Most patients (90 %) are diagnosed in the chronic phase, a relatively slowly progressing and primarily asymptomatic stage.3 However, unless the disease is controlled or eliminated, patients eventually progress to an intermediate accelerated phase, characterized by poor control of white blood cell counts and increasing numbers of immature blasts in the peripheral blood. After one to two years, the disease transitions into the terminal blast phase,4 which resembles acute leukemia and leads to metastasis, organ failure, and death.2

The disease is characterized by the expansion of a clone of hematopoietic cells that carries the Philadelphia (Ph) chromosome. The Ph chromosome results from a balanced translocation between chromosomes 9 and 22,2 the molecular consequence of which is the formation of a novel, constitutively expressed fusion gene, BCR-ABL. The BCR-ABL fusion protein contains a constitutively active tyrosine kinase region that deregulates cell growth, motility, angiogenesis, and apoptosis, leading to the development of leukemia.3

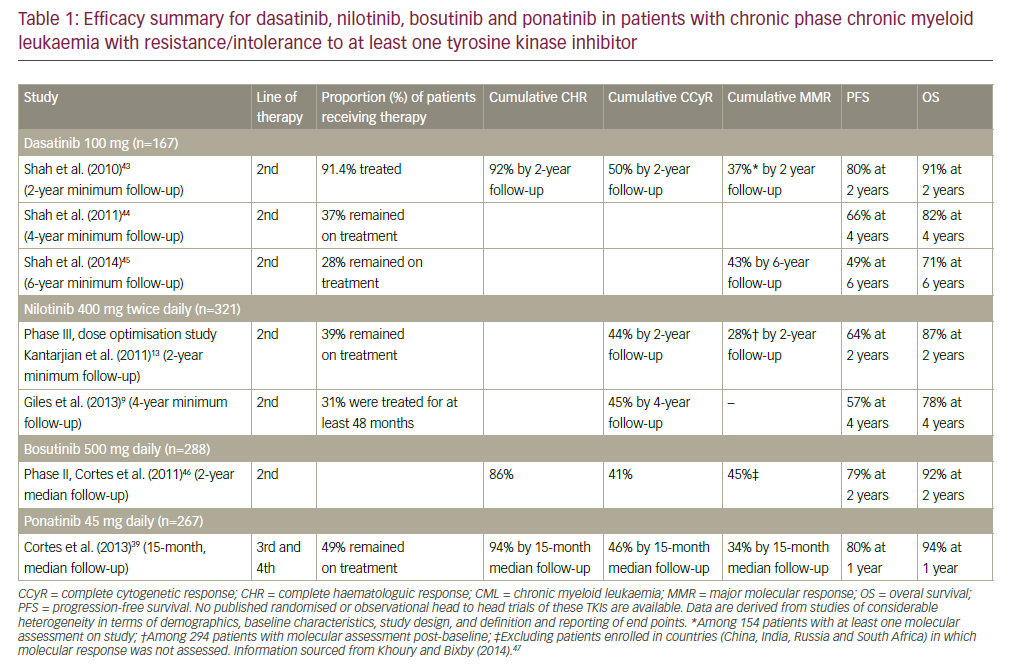

Targeted inhibition of the oncogenic function of this constitutively active kinase is a highly effective and now standard therapeutic approach for patients with CML. Standard front-line therapy for CML patients is imatinib mesylate (Gleevec®, Novartis Pharmaceuticals, Basel, Switzerland), the first tyrosine kinase inhibitor (TKI) to be approved by the US Food and Drug Administration (FDA) for the treatment of CML.5 The rates of complete cytogenetic response among patients receiving imatinib were 68 % at 12 months and 87 % at 60 months.6,7 The estimated overall survival of patients who received imatinib as initial therapy was 89 % at 60 months, in marked contrast to survival rates before imatinib became available.7 However, responses to first-line imatinib vary: patients with primary (intrinsic) or secondary (acquired) resistance have a higher risk of disease progression.8 The currently approved second-generation TKIs, nilotinib (Tasigna®, Novartis Pharmaceuticals, Basel, Switzerland) and dasatinib (Sprycel®, Bristol-Myers Squibb, New York, USA), are effective for the treatment of patients with imatinib-resistant and imatinib-intolerant disease and are also indicated as first-line treatments for CML in the US.9–12 Early evaluation of response during therapy is important for determining whether a patient is meeting the response milestones that correlate with optimal outcome. Therefore, frequent disease monitoring to assess treatment response and to detect treatment failure or suboptimal response is recommended and is one of the key management strategies in CML.13–15

Monitoring Disease Response to Tyrosine Kinase Inhibitor Therapy in Chronic Myeloid Leukemia

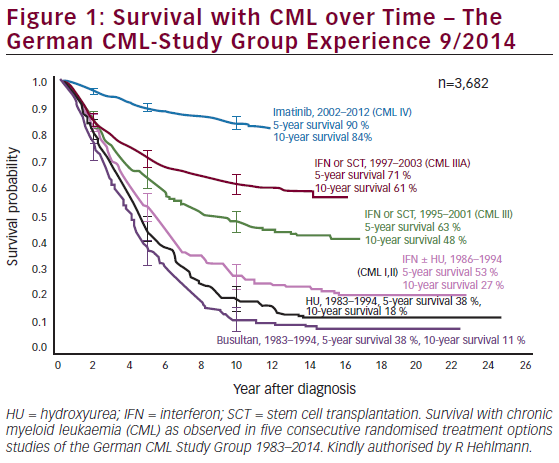

The therapeutic progress of CML patients receiving first-line TKI therapy is measured against a series of response milestones (hematological, cytogenetic, and molecular), which are assessed using techniques of increasing sensitivity (see Figure 1).13,16

Hematological Response

A complete hematological response (CHR) is defined as the complete normalization of peripheral blood counts and spleen size. Blood counts and differentials are required every two weeks until a CHR has been achieved and confirmed, and at least every three months thereafter. The treatment goal is to achieve a CHR within one to three months after the start of treatment; the majority of imatinib-treated patients achieve this goal.7,17
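The definition above can be made concrete as a single yes/no check on a blood count. In the minimal sketch below, the specific thresholds (white blood cells below 10 × 10^9/L, platelets below 450 × 10^9/L, basophils below 5 %, no immature granulocytes, non-palpable spleen) are an assumption taken from commonly cited European LeukemiaNet criteria rather than from this article, and the class and function names are hypothetical.

```python
from dataclasses import dataclass

# Assumed cut-offs (commonly cited European LeukemiaNet CHR criteria; not
# stated in this article). Each value must be strictly below its limit.
WBC_LIMIT = 10.0        # white blood cells, x10^9/L
PLATELET_LIMIT = 450.0  # platelets, x10^9/L
BASOPHIL_LIMIT = 5.0    # basophils, % of the differential


@dataclass
class BloodCount:
    wbc: float              # x10^9/L
    platelets: float        # x10^9/L
    basophils_pct: float    # % of differential
    immature_cells: bool    # immature granulocytes seen on the differential
    spleen_palpable: bool   # spleen enlarged on examination


def is_complete_hematological_response(bc: BloodCount) -> bool:
    """Hypothetical helper: True when counts and spleen size are normalized."""
    return (
        bc.wbc < WBC_LIMIT
        and bc.platelets < PLATELET_LIMIT
        and bc.basophils_pct < BASOPHIL_LIMIT
        and not bc.immature_cells
        and not bc.spleen_palpable
    )
```

For example, 6.2 × 10^9/L white cells and 250 × 10^9/L platelets, with 1 % basophils, no immature cells, and a non-palpable spleen, would qualify under these assumed cut-offs; the same counts with a palpable spleen would not.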

Cytogenetic Response

Cytogenetic monitoring is the most widely used technique for monitoring treatment response in patients with CML.14 Cytogenetic response is determined by bone marrow metaphase chromosome analysis and is based on the percentage of Ph+ metaphases. A complete cytogenetic response (CCR) indicates that no Ph+ metaphases are detected. The National Comprehensive Cancer Network (NCCN) clinical practice guidelines for CML recommend assessing cytogenetic response in the bone marrow at six, 12, and 18 months after the start of imatinib therapy. The majority of patients achieve a CCR within 12 months of starting TKI therapy.7,10,12 A five-year update of the pivotal Phase III International Randomized Study of Interferon and STI571 (IRIS) revealed that continuous imatinib treatment of chronic-phase CML induced a CCR in 87 % of patients at some point during therapy, which translated into impressive long-term outcomes.7 Conversely, lack of a CCR at 18 months is considered imatinib failure, and a switch to a second-generation TKI is recommended.14 Similarly, cytogenetic results over the first 12 months of nilotinib or dasatinib therapy after imatinib failure may be used to guide treatment.14,18
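Because cytogenetic response is graded on the percentage of Ph+ metaphases, it reduces to simple arithmetic on metaphase counts. In the minimal sketch below, only the CCR category (0 % Ph+) and the 18-month failure rule come from the text; the partial, minor, and minimal cut-offs (1 to 35 %, 36 to 65 %, 66 to 95 %) are assumed from commonly used European LeukemiaNet definitions, and all function names are hypothetical.

```python
def percent_ph_positive(ph_positive: int, metaphases_scored: int) -> float:
    """Percentage of scored bone marrow metaphases that carry the Ph chromosome."""
    if metaphases_scored <= 0:
        raise ValueError("at least one metaphase must be scored")
    if not 0 <= ph_positive <= metaphases_scored:
        raise ValueError("Ph+ count must lie between 0 and the number scored")
    return 100.0 * ph_positive / metaphases_scored


def cytogenetic_response(ph_positive: int, metaphases_scored: int) -> str:
    """Grade the response. Only 'complete' (0 % Ph+) is defined in the text;
    the other cut-offs are assumed ELN-style categories."""
    pct = percent_ph_positive(ph_positive, metaphases_scored)
    if pct == 0:
        return "complete"   # CCR: no Ph+ metaphases
    if pct <= 35:
        return "partial"
    if pct <= 65:
        return "minor"
    if pct <= 95:
        return "minimal"
    return "none"


def imatinib_failure_at_18_months(months_on_imatinib: float, response: str) -> bool:
    """Rule from the text: lack of a CCR at 18 months is imatinib failure."""
    return months_on_imatinib >= 18 and response != "complete"
```

For example, 5 Ph+ metaphases out of 20 scored gives 25 % Ph+, which this sketch grades as a partial response; at 18 months on imatinib, that result would meet the failure rule described above.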

Molecular Response

Molecular response is defined by the magnitude of reduction in peripheral blood BCR-ABL transcript levels. Imatinib-treated patients who have achieved a CCR may still have detectable BCR-ABL messenger RNA (mRNA), so more sensitive molecular methods are required to detect and quantify residual disease.19 BCR-ABL monitoring by real-time quantitative polymerase chain reaction (RQ-PCR) in patients with a CCR during TKI treatment is the most sensitive tool for assessing disease burden in CML.20 In addition to detecting minimal residual disease, BCR-ABL monitoring can serve as a marker of resistance to TKI therapy and of relapse; it may also help identify periods of poor treatment compliance.21,22

A complete molecular response (CMR) occurs when BCR-ABL mRNA is undetectable in the blood; a major molecular response (MMR) is defined as a >3-log reduction in the BCR-ABL/control gene ratio compared with the median pre-treatment level. The European LeukemiaNet and the NCCN recommend reverse transcriptase PCR (RT-PCR)-based molecular monitoring every three months until a response occurs and every three to six months thereafter.14,15 Close concordance between BCR-ABL monitoring results and cytogenetic response has been observed.23,24 Furthermore, achieving an MMR (at 12 or 18 months after starting imatinib) predicts superior long-term clinical outcomes with imatinib therapy. For example, the five-year follow-up of the IRIS study showed that no patient who had achieved both a CCR and an MMR at 12 months progressed to the accelerated or blast phase.7 The estimated progression-free survival (PFS) at 24 months was 100 % for patients who achieved a CCR and an MMR at 12 months.7 Failure to achieve an MMR during imatinib therapy was associated with inferior outcomes, including significantly shorter PFS,25 and may be a ‘warning feature’ indicating that a patient requires more frequent monitoring or is experiencing a suboptimal response.15 Molecular response also correlates with long-term outcomes in patients treated with second-generation TKIs, although earlier and more frequent testing may be appropriate with these agents because responses are more rapid.26,27 Achievement of an MMR has therefore become both a consensus goal of CML therapy14,15 and a surrogate response end-point in CML clinical trials.10

Several studies have shown that rising BCR-ABL transcript levels may signal the emergence of BCR-ABL kinase domain mutations that confer resistance to imatinib.28,29 The magnitude of increase that predicts such events varies, in part because of variability in testing between laboratories, and may range from greater than two-fold to 10-fold.15 Marin et al. demonstrated that a confirmed doubling of BCR-ABL levels was a significant predictor of loss of CCR and progression to advanced phase, provided that the eventual BCR-ABL/ABL transcript level exceeded 0.05 %.30 Rising BCR-ABL transcript levels may indicate the need for more frequent laboratory monitoring (molecular and cytogenetic), but the NCCN guidelines currently make no specific recommendation for changing therapy based solely on rising BCR-ABL transcripts; such decisions need to be supported by bone marrow cytogenetics.14 Screening for BCR-ABL kinase domain mutations may be considered if there is a loss of response to imatinib (or an inadequate initial response) and may also help in selecting subsequent TKI therapy.14,15
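The MMR definition and the rising-transcript criterion above can both be expressed as small calculations. The sketch below assumes results are already on the International Scale (IS), where a 3-log reduction from the 100 % IS baseline corresponds to 0.1 % IS. The `confirmed_doubling` rule is a hypothetical simplification of the Marin et al. criterion (the last two results each at least twice the lowest earlier value, the latest above 0.05 %); the published definition may differ in detail.

```python
import math
from typing import Sequence

IS_BASELINE = 100.0   # standardized baseline on the International Scale (% IS)
MMR_THRESHOLD = 0.1   # % IS, equivalent to a 3-log reduction from baseline


def log_reduction(bcr_abl_pct: float, baseline_pct: float = IS_BASELINE) -> float:
    """Log10 reduction of a BCR-ABL/control ratio relative to a baseline."""
    if bcr_abl_pct <= 0:
        # Undetectable transcripts: the reduction is unbounded in this sketch
        return math.inf
    return math.log10(baseline_pct / bcr_abl_pct)


def is_mmr(bcr_abl_is_pct: float) -> bool:
    """MMR on the International Scale: BCR-ABL at or below 0.1 % IS."""
    return bcr_abl_is_pct <= MMR_THRESHOLD


def confirmed_doubling(values: Sequence[float], floor_pct: float = 0.05) -> bool:
    """Hypothetical rule: the last two results are each at least twice the
    lowest earlier result (the nadir), and the latest exceeds floor_pct.
    A rise from an undetectable nadir (0) is not handled by this sketch."""
    if len(values) < 3:
        return False
    nadir = min(values[:-2])
    last_two = values[-2:]
    return (
        nadir > 0
        and all(v >= 2 * nadir for v in last_two)
        and last_two[-1] > floor_pct
    )
```

For example, a result of 0.1 % IS corresponds to a 3-log reduction from baseline, and the series 0.04, 0.02, 0.05, 0.06 % would be flagged as a confirmed doubling under this rule, whereas 0.01, 0.02, 0.03 % would not, because the latest value stays below 0.05 %.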

Current Approaches and Challenges to BCR-ABL Monitoring

Overview of the BCR-ABL Test Process

The RQ-PCR analytical process consists of multiple steps, which are summarized in Figure 2. Briefly, peripheral blood is collected and total leukocytes are recovered by lysis of red blood cells. RNA is then extracted from the leukocytes and reverse-transcribed to complementary DNA (cDNA). To account for variation (between samples and/or laboratories) in RNA extraction and reverse-transcription efficiency, the number of BCR-ABL copies is normalized to that of a housekeeping control gene.31 Thus, two measurements are made by RQ-PCR for every sample: estimates of the number of BCR-ABL transcripts and of control gene transcripts. For BCR-ABL-positive samples, the result is expressed as the ratio of BCR-ABL transcripts to control gene transcripts.
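The quantification step is usually carried out by reading copy numbers off a standard curve built from calibrators of known copy number and then taking the BCR-ABL/control ratio. The sketch below assumes an ordinary least-squares fit of cycle threshold (Ct) against log10 copy number, fitted separately for each gene; the function names and example values are illustrative and not taken from any specific laboratory protocol.

```python
from typing import Sequence, Tuple


def fit_standard_curve(log10_copies: Sequence[float], ct: Sequence[float]) -> Tuple[float, float]:
    """Least-squares fit of Ct = slope * log10(copies) + intercept."""
    n = len(ct)
    if n < 2 or n != len(log10_copies):
        raise ValueError("need at least two paired standards")
    mx = sum(log10_copies) / n
    my = sum(ct) / n
    sxx = sum((x - mx) ** 2 for x in log10_copies)
    sxy = sum((x - mx) * (y - my) for x, y in zip(log10_copies, ct))
    slope = sxy / sxx
    intercept = my - slope * mx
    return slope, intercept


def copies_from_ct(ct_value: float, slope: float, intercept: float) -> float:
    """Interpolate a copy number from a sample's Ct using the fitted curve."""
    return 10 ** ((ct_value - intercept) / slope)


def bcr_abl_ratio_pct(bcr_abl_copies: float, control_copies: float) -> float:
    """BCR-ABL transcripts expressed as a percentage of control gene transcripts."""
    if control_copies <= 0:
        raise ValueError("control gene copies must be positive")
    return 100.0 * bcr_abl_copies / control_copies


if __name__ == "__main__":
    # Illustrative standards: 10^1 to 10^5 copies with a slope of -3.32
    # (close to 100 % amplification efficiency); values are made up.
    logs = [1, 2, 3, 4, 5]
    cts = [40 - 3.32 * x for x in logs]
    slope, intercept = fit_standard_curve(logs, cts)
    bcr_abl = copies_from_ct(30.04, slope, intercept)  # roughly 1,000 copies
    print(f"BCR-ABL/control: {bcr_abl_ratio_pct(bcr_abl, 1_000_000):.3f} %")
```

Fitting each gene against its own calibrators is what lets the ratio cancel out differences in RNA input and reverse-transcription efficiency between samples.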

BCR-ABL Monitoring Tests in the US and Abroad

The majority of BCR-ABL testing in the US and Europe is performed using laboratory-developed tests (LDTs, sometimes referred to as ‘home brew’ tests).32 These tests are generally developed, validated, and offered within a single laboratory and, as such, are not actively regulated by the FDA. In the US, a number of organizations (e.g. Quest Diagnostics, Labcorp), reference laboratories, and molecular pathology laboratories perform BCR-ABL testing with LDTs as a service under the Clinical Laboratory Improvement Amendments (CLIA) of 1988. In addition, commercial BCR-ABL tests developed by diagnostic manufacturers are available (e.g. GeneXpert®, Cepheid; LightCycler® t[9;22], Roche), none of which has been cleared by the FDA. The lack of standardization among BCR-ABL LDTs results in variability in the RQ-PCR system components and processes used across US and international laboratories. For example, three control genes are recommended as suitable for normalizing BCR-ABL RQ-PCR results,31,33 although others may be used depending on the preference of the testing laboratory.32 Beyond the choice of control gene, an international study by Branford and colleagues found considerable variation in instrumentation and methodologies across 38 participating laboratories.34 An inter-laboratory comparison of BCR-ABL monitoring in 38 North American laboratories also reported significant variation in the analytical systems and processes used.35 Such methodological variations may explain the lack of comparability of BCR-ABL results between (and sometimes within) centers.
Indeed, Branford and colleagues observed a high degree of laboratory-to-laboratory variability in BCR-ABL measurements among 19 LDTs, with the average difference between each method and a reference method ranging from 7.7-fold lower to 8.1-fold higher.34 Similar discordance of BCR-ABL results across laboratories has been reported in other studies.35,36 RQ-PCR methodology is complex and requires considerable attention to detail to ensure reproducible results. Hence, in addition to variation among methods, shortcomings such as suboptimal procedures, performance problems, and operator error may affect the accuracy and reproducibility of BCR-ABL testing.37 Taken together, the variability and performance problems associated with LDT-based BCR-ABL testing have implications for CML patients and physicians when BCR-ABL transcript levels are used to inform clinical decisions.
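To see why fold-level bias matters clinically, consider how a systematic bias of the magnitude reported by Branford and colleagues would move a result across the 0.1 % MMR threshold. The sketch below is a hypothetical model: it treats bias as a simple multiplicative factor and treats the reference method as if it reported on the International Scale, whereas real inter-laboratory error is not purely multiplicative.

```python
MMR_THRESHOLD = 0.1  # % IS; results at or below this meet MMR


def reported_value(true_pct: float, fold_bias: float) -> float:
    """Result a method would report under a purely multiplicative bias.

    fold_bias > 1 over-quantifies and fold_bias < 1 under-quantifies
    (hypothetical model for illustration only).
    """
    return true_pct * fold_bias


def meets_mmr(pct: float) -> bool:
    return pct <= MMR_THRESHOLD


if __name__ == "__main__":
    # Bias range from the text: 7.7-fold lower to 8.1-fold higher
    for true_pct in (0.05, 0.5):
        for bias in (1 / 7.7, 1.0, 8.1):
            rep = reported_value(true_pct, bias)
            print(
                f"true {true_pct:.2f} % -> reported {rep:.3f} % "
                f"(MMR: {'yes' if meets_mmr(rep) else 'no'})"
            )
```

Under this model, a sample truly at 0.05 % (within MMR) would be reported near 0.4 % by a method biased 8.1-fold high, while a sample at 0.5 % would appear to be in MMR (about 0.065 %) under a 7.7-fold low bias.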

Implications of BCR-ABL Laboratory-developed Test Shortcomings and Performance Problems

Inaccurate BCR-ABL reporting could misinform physician decisions, with implications for patient care, disease management, and costs.16 For example, a test that reports a false-positive or over-quantified result could lead to an unnecessary bone marrow cytogenetic test, which is painful for the patient and expensive, or to TKI dose escalation, which could increase toxicity. Conversely, false-negative or under-quantified BCR-ABL results could cause signs of relapse, resistance to therapy, or problems with therapy compliance to be missed. Aside from inaccurate reporting, a lack of comparability between laboratories could impede a patient's ability to obtain a second opinion at a different medical center. Furthermore, if a patient moves to another laboratory because of relocation or a change in job and/or health insurance, the new results may not be consistent with their testing history, creating confusion about BCR-ABL trends. Although US and European guidelines recommend BCR-ABL monitoring for detecting signs of relapse and therapy resistance, accuracy and variability issues such as those discussed above are a potential reason why molecular monitoring is not recommended for more extensive use in treatment decisions.14

Current Efforts to Implement BCR-ABL Monitoring Standardization

Issues with BCR-ABL monitoring are recognized within the CML community, and standardization of testing is accepted as an unmet need.32 However, as highlighted recently by Cross,32 several factors make standardization of BCR-ABL monitoring particularly challenging, including the technical complexity of the RQ-PCR assay and the lack of consensus on the best methodology. Nevertheless, several international efforts have attempted to address this issue. The International Scale (IS), a benchmark for normalizing the variance seen among assays, is a significant step towards standardization of BCR-ABL measurements. This benchmark was established in the IRIS trial, which defined 100 % IS as the standardized baseline and 0.1 % IS as an MMR.7 Because RQ-PCR processes and components vary between laboratories, laboratory-specific conversion factors are used to convert local results to the IS. This approach allows laboratories to continue using existing procedures (thereby generating results comparable to those they have generated in the past) while facilitating comparison of results between laboratories.38 However, applying these factors is complex, time-consuming, and expensive,32 and the IS has therefore not yet been broadly adopted for BCR-ABL reporting in the US.13 Several international studies have developed and tested standardized components of the RQ-PCR analytical system, including a standardized RQ-PCR protocol, control genes, and materials for standard curve calibration.31,39,40 These studies demonstrated the positive impact of standardization and contributed to harmonization guidelines aimed at improving the quality and reproducibility of BCR-ABL detection methods.33,37 Despite the importance and potential benefits of these efforts, suboptimal procedures and performance problems within individual laboratories mean that comparability of results between laboratories does not necessarily follow, even when common procedures are adopted.
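A laboratory-specific conversion factor is commonly derived by measuring a set of shared samples both locally and in a reference laboratory, then taking the antilog of the mean log ratio between the two sets of results. The sketch below shows that calculation in its simplest form; it assumes paired results on the same samples, skips undetectable results, and omits the outlier handling and ongoing validation that a real sample-exchange procedure would include. The function names are hypothetical.

```python
import math
from typing import Sequence


def conversion_factor(local_pct: Sequence[float], reference_is_pct: Sequence[float]) -> float:
    """Antilog of the mean log10 ratio of reference (IS) values to local
    values measured on the same exchanged samples."""
    if not local_pct or len(local_pct) != len(reference_is_pct):
        raise ValueError("need paired, non-empty measurements")
    log_ratios = [
        math.log10(ref / loc)
        for loc, ref in zip(local_pct, reference_is_pct)
        if loc > 0 and ref > 0  # undetectable results cannot enter a log ratio
    ]
    if not log_ratios:
        raise ValueError("no samples with detectable BCR-ABL in both laboratories")
    return 10 ** (sum(log_ratios) / len(log_ratios))


def to_international_scale(local_result_pct: float, cf: float) -> float:
    """Convert a local BCR-ABL/control percentage to % IS."""
    return local_result_pct * cf
```

For instance, if a local method consistently reports values twice as high as the reference laboratory, the factor comes out at about 0.5, so a local result of 0.16 % would convert to about 0.08 % IS, within the MMR range. Averaging on the log scale keeps a single high-level sample from dominating the factor.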

An alternative approach to achieving reproducible BCR-ABL results across laboratory settings would be a commercial assay supplied to the user as a validated, standardized, and complete kit. A self-contained, automated test classified as an ‘in vitro diagnostic product,’ and therefore subject to stringent FDA review and validation against criteria for clinical use, may be an effective way to mitigate LDT shortcomings while preserving the advantages of current standardization efforts. Through reproducible manufacturing of materials, such a platform could greatly reduce component variation, and the minimal hands-on time afforded by automation may reduce performance issues such as operator error.

Furthermore, BCR-ABL molecular monitoring is currently underused,41 and an FDA-approved, widely available test could improve utilization rates. Citing the PCR techniques used to measure viral load in HIV patients, Hughes and colleagues highlight the precedent for standardized commercial disease-monitoring kits evolving from LDTs and suggest that BCR-ABL monitoring may follow the same pattern.33 Ongoing efforts to this end include work towards US commercialization of the Xpert® BCR-ABL assay from Cepheid. This RQ-PCR platform has been tested and validated in several published studies,42–45 carries a CE Mark in Europe, and has been available outside the US since 2006. A collaboration between Novartis and Cepheid to perform the studies necessary for FDA approval of this BCR-ABL test is ongoing.

Summary and Conclusions

Routine molecular monitoring of CML patients using RQ-PCR is of clinical value, but the BCR-ABL testing landscape is fragmented, with extensive use of LDTs in the US. The use of diverse tests with variable protocols and validation approaches that lack robust quality control and assurance standards can make results difficult to interpret, with resulting implications for patient care. The need to standardize BCR-ABL testing is accepted within the CML community, and significant advances towards this end have been made in recent years. However, standardization is a challenging and expensive process and, even when standardization guidelines are followed, performance problems within laboratories may still result in assay variability and suboptimal results. In addition to ongoing standardization efforts, the availability of an FDA-approved BCR-ABL testing kit in the US may provide a superior monitoring technology, with a resultant beneficial impact on patient management.