Almost daily, media sources in the US confront us with a disquieting demographic fact of the early 21st century: the baby ‘boomers’ are getting older. This fact introduces many of the grant applications reviewed at the National Institutes of Health (NIH), which emphasize the growing population at risk of developing cancer. It informs almost every analysis of a disease that could be age-dependent, and it sits alongside some lesser-known facts, such as that people are less likely to relocate as they age: the moving rate drops from 70% in those who are 20–29 years old to 9% in 45–65 year-olds. Perhaps, as the boomers grow older, more of them will have their cancer treated in non-urban settings, further stressing supportive care networks. Unfortunately, cancer treatments may not be applied uniformly throughout the US, which makes this relocation statistic even more interesting. To quote an important finding from the Surveillance, Epidemiology, and End Results (SEER) data provided in 2004:

“The continued measurable declines for overall cancer death rates and for many of the top 15 cancers, along with improved survival rates, reflect progress in the prevention, early detection, and treatment of cancer. However, racial and ethnic disparities in survival and the risk of death from cancer, and geographic variation in stage distributions suggest that not all segments of the US population have benefited equally from such advances.”1

The diversity of the 76 million baby boomers born between 1946 and 1964, and their projected longer life span, create challenges for all those examining these facts. These demographic issues also inform the discussion of the use of myeloid colony stimulating factors (CSFs) in cancer. CSF use is projected to increase, it is not uniform across the US, and new molecular advances in translational cancer medicine may yet alter it as well.

There are four general ways in which CSFs are used in cancer treatment in a non-transplant setting. The first is as a primary prophylactic measure to prevent severe neutropenia with its attendant morbidity and mortality risks and cost consequences. The ASCO guidelines point out that cost analyses have shown that CSFs save money when the risk of febrile neutropenia (FN) is greater than 40%.2 These guidelines also point out that most moderate-dose-intensity solid tumor cancer regimens have FN rates of approximately 15%, and that primary prophylaxis is not a regular approach for these chemotherapies. The exception to this rule is the older patient, for whom the 15% assumption is not correct. In nine clinical trials of older patients with large cell non-Hodgkin’s lymphoma, the risk of FN was between 21% and 47%, and many authors indicate that primary prophylaxis is appropriate for patients aged 70 years or older who are receiving moderate-intensity chemotherapy.3 Furthermore, advances in treatment have made the combination of monoclonal antibody therapy, using Rituxan™, with chemotherapy the standard of care, or at least the benchmark of care, in the lymphoid malignancies, lymphoma, and chronic lymphocytic leukaemia (CLL). This has unexpectedly resulted in increased myelosuppression in some of these combination regimens. Thus, the aging boomers will require increased use of CSFs in these clinical situations. Clinical practice for primary prophylaxis is also quite varied in the US, as suggested by the disparity of outcomes in the aforementioned SEER statistics. Several studies have indicated that compliance with ASCO guidelines for primary prophylaxis with CSFs is not much better than 50%,4 with overuse rather than underuse being the more common deviation.

The second use of myeloid CSFs is as secondary prophylaxis, which means administering them after a patient has had an episode of FN or severe neutropenia. Here, too, there is controversy over whether to prevent another event by reducing the chemotherapy dose or by keeping the same chemotherapy and initiating myeloid CSFs. In practice, CSFs are routinely given at this point whether or not the dose is modified.

The third use of CSFs in cancer is for myeloid recovery after dose-intense chemotherapy, such as in the acute leukemias. In this setting, all patients will have severe neutropenia, and the majority will have fever and require hospitalization. The aim in this clinical situation is to accelerate myeloid recovery after treatment, reduce the duration of antibiotic therapy, attempt to reduce the complications of infection, and shorten the hospital stay. This use has been endorsed by the ASCO guidelines,2 especially in the older patient, is economically sound, and is almost uniformly adopted in US practice.5 Again, demographic facts point to an increase in this use with the aging boomers, as the number of patients with acute myeloid leukaemia (AML) will rise with the graying tide. In addition, the hematologic disorder termed myelodysplastic syndrome (MDS) is more common than AML in older individuals, and it too is on the rise. The first and only FDA-approved chemotherapy (Vidaza™) for MDS was approved this year. Its use in MDS will undoubtedly increase the use of CSFs. The use of CSFs during Vidaza™ treatment will be predominantly in line with both primary and secondary prophylaxis guidelines from ASCO. Published reports indicate that granulocytopenia occurred in 58% of patients treated with this agent, suggesting that this treatment will meet ASCO guidelines for primary prophylaxis.6

The fourth use of CSFs in cancer is as a ‘priming’ agent given before chemotherapy to increase the effect of chemotherapy. This has been studied in the myeloid leukemias with mixed results. In general, this approach has not been successful in most trials; however, a recent report of improved disease-free survival (DFS) has produced a resurgence of interest in it.7 After an average follow-up of 55 months, patients in complete remission who had received induction chemotherapy plus granulocyte (G)-CSF had a higher rate of DFS than patients who had not received G-CSF. G-CSF did not improve the outcome in the subgroup of patients with an unfavorable prognosis based on their cytogenetic findings. In the 72% of patients with standard-risk AML, overall survival at four years was 45%, compared with 35% in the group that did not receive G-CSF. This intriguing observation may spur more research.

So what factors may influence the continued use of the myeloid CSFs in cancer therapy in the future? One of the most touted advances in our treatment approach to cancer is the development of molecularly targeted agents. These treatments in general will not produce the myelosuppression that we have been discussing with chemotherapy. The expected result is already apparent: diseases that respond to these drugs can be treated without chemotherapy. It is hoped that more such agents will arrive in the future, especially for severe diseases such as leukemia. Already, second-line therapy clinical trials are being designed with these agents, especially in the older patient. As more such agents become available, this will decrease the use of CSFs in cancer in general. Finally, one can imagine that molecular agents may mimic the CSFs themselves. The CSFs produce their myeloid stimulatory effect at a cellular level. This effect is the result of the transmission of signals from the cell receptor site for the CSF (G-CSF or granulocyte-macrophage (GM)-CSF) through signal transduction pathways in the cell to the nuclear processes that regulate the cell cycle, division, and maturation. These intracellular pathways themselves can be the target of molecular therapies that mimic the action of CSFs. This promise may be on the horizon in any laboratory at this moment. In the future, this option may increase choices and provide new agents beyond today’s CSFs.

Colony Stimulating Factors in Solid Tumor Cancer and Acute Leukemia

References

1. Jemal A, Clegg L X, Ward E, Ries L A G, Wu X, Jamison P M, Wingo P A, Howe H L, Anderson R N and Edwards B K, “Annual Report To The Nation On The Status Of Cancer, 1975–2001, With A Special Feature Regarding Survival”, Cancer (2004), 101: pp. 3–27.

2. Ozer H, Armitage J O, Bennett C L, et al., “2000 Update of Recommendations for the Use of Hematopoietic Colony-Stimulating Factors: Evidence-Based, Clinical Practice Guidelines”, J. Clin. Oncol. (2000), 18 (20): pp. 3,558–3,585.

3. Balducci L, Lyman G H and Ozer H, “Patients Aged 70 Are at High Risk for Neutropenic Infection and Should Receive Hemopoietic Growth Factors When Treated With Moderately Toxic Chemotherapy”, J. Clin. Oncol. (2001), 19: pp. 1,583–1,585.

4. Debrix I, Madelaine I, Grenet N, Roux D, Bardin C, Le Mercier F, Fontan J E, Connor O, Pointereau A and Tilleul P, “Impact Of ASCO Guidelines For The Use Of Hematopoietic Colony Stimulating Factors (CSFs): Survey Results Of Fifteen Paris University Hospitals”, Pharm. World Sci. (1999), 21 (6): pp. 270–271.

5. Bennett C L, Stinson T J, Laver J H, Bishop M R, Godwin J E and Tallman M S, “Cost Analysis of Adjunct Colony Stimulating Factors for Acute Leukemia: Can They Improve Clinical Decision Making?”, Leuk. Lymphoma (2000), 37: pp. 65–70.

6. Silverman L R, Demakos E P, Peterson B L, Kornblith A B, Holland J C, Odchimar-Reissig R, Stone R M, Nelson D, Powell B L, DeCastro C M, Ellerton J, Larson R A, Schiffer C A and Holland J F, “Randomized Controlled Trial Of Azacitidine In Patients With The Myelodysplastic Syndrome: A Study Of The Cancer And Leukemia Group B”, J. Clin. Oncol. (2002), 20 (10): pp. 2,429–2,440.

7. Lowenberg B, van Putten W, Theobald M, Gmur J, Verdonck L, Sonneveld P, Fey M, Schouten H, de Greef G, Ferrant A, Kovacsovics T, Gratwohl A, Daenen S, Huijgens P and Boogaerts M, “Effect Of Priming With Granulocyte Colony-Stimulating Factor On The Outcome Of Chemotherapy For Acute Myeloid Leukemia”, N. Engl. J. Med. (2003), 349 (8): pp. 743–752.

Further Resources

Trending Topic

Welcome to the latest issue of touchREVIEWS in Oncology & Haematology. We are honoured to present a series of compelling articles that reflect cutting-edge developments and diverse perspectives in this ever-evolving field. This issue includes a series of editorials and reviews from esteemed experts who provide insights into novel therapies and their potential to change […]

Related Content in Leukaemia

Key points Olutasidenib induces durable responses in patients with relapsed or refractory (R/R) acute myeloid leukaemia (AML) with isocitrate dehydrogenase 1 (IDH1) mutations in the phase I and II clinical trials. The side effects of olutasidenib are well known and ...

Acute myeloid leukaemia (AML) is a heterogeneous haematological malignancy characterized by the presence of ≥20% blasts in bone marrow or peripheral blood or the presence of defined genetic abnormalities.1 In 2020, there were an estimated 21,450 new patients with AML and 11,180 AML-related deaths ...

Acute lymphoblastic leukaemia (ALL) is a group of malignant haematological disorders characterised by accumulation of lymphoid cell precursors that replace normal bone marrow elements and inhibit the production of functional blood cells.1 ALL occurs in both children and adults, and ...

Acute myeloid leukaemia (AML) is a complex and heterogeneous disease characterised by a multitude of molecular abnormalities. Better understanding of the mutational landscape has resulted in the development of targeted treatments in the last decade.1,2 AML is the most common ...

Chronic lymphocytic leukemia, diffuse large B-cell lymphoma, and follicular lymphoma are the three most common lymphoproliferative malignancies.1 All are B-cell malignancies and, although diverse in their underlying pathologies and clinical presentation, until recently have been treated the same way. The ...

Welcome to the summer edition of European Oncology and Haematology, a bi-annual journal that features a wide variety of topical articles of interest to oncologists and haematologists as well as the wider medical community. We begin with two editorials debating ...

Acute lymphoblastic leukemia (ALL) is of B cell precursor lineage (BCP-ALL) or, less commonly, T cell precursor lineage (T-ALL), which comprise multiple subtypes defined by structural chromosomal alterations that are initiating lesions, with secondary somatic DNA copy number alterations and ...

Acute myeloid leukaemia (AML) is a heterogeneous clonal disorder of haematopoietic progenitor cells resulting in uncontrolled growth and accumulation of malignant white blood cells. It is the most common myeloid leukaemia in adults, with a prevalence of 3–8 cases per 100,000 adults ...

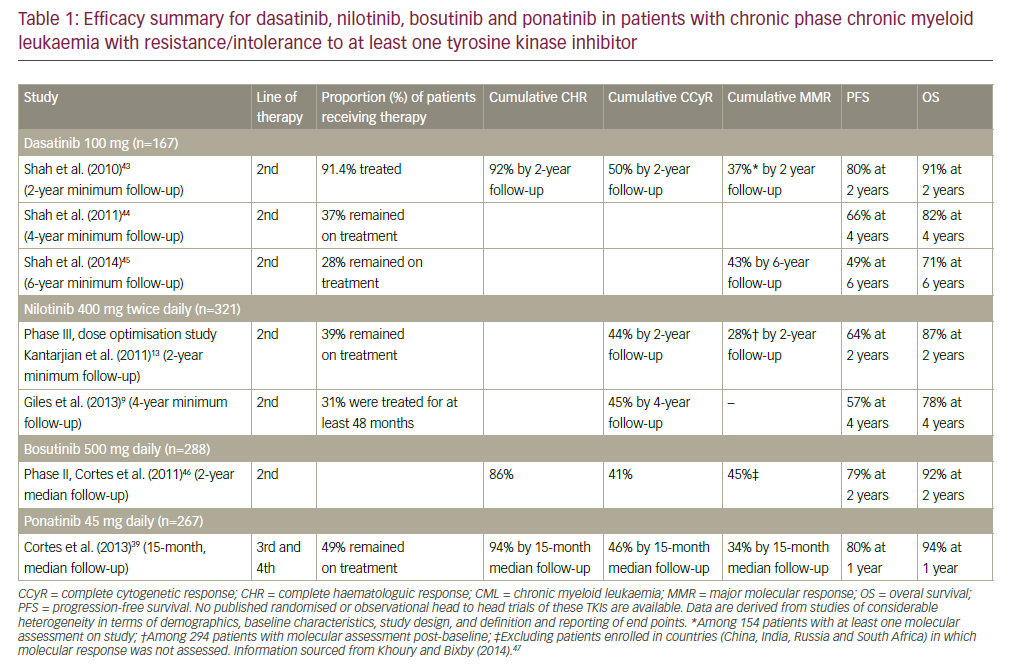

Imatinib (Gleevec®, Novartis Pharmaceutical Corporation, New Jersey, US) is a BCR-ABL1 tyrosine kinase inhibitor (TKI) that has dramatically improved the outcome of patients with chronic myeloid leukaemia in chronic phase (CML-CP).1 Although most of the patients with CML-CP do well ...

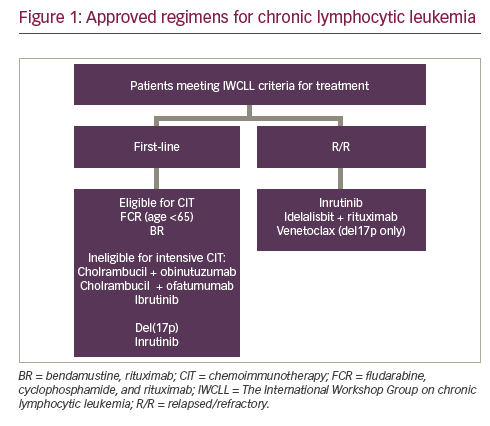

Chronic lymphocytic leukemia (CLL) is the most prevalent adult leukemia with an estimated 18,960 new cases diagnosed in the US in 2016.1 The past few years have witnessed major advances in the treatment of CLL with several new drugs receiving US Food ...

The treatment of patients with chronic phase chronic myeloid leukaemia (CP-CML) has changed dramatically with the advent of the first BCR-ABL tyrosine kinase inhibitor (TKI) imatinib (Gleevec®, Novartis Pharmaceutical Corporation, New Jersey, US) in the 1990s.1,2 Multiple studies demonstrated the ...

The overwhelming majority of patients diagnosed with chronic phase chronic myeloid leukaemia (CP-CML) can achieve long-term survival with a good quality of life. In a recent review, it has been suggested that CML patients responsive to tyrosine kinase inhibitors (TKIs) ...

Latest articles videos and clinical updates - straight to your inbox

Log into your Touch Account

Earn and track your CME credits on the go, save articles for later, and follow the latest congress coverage.

Register now for FREE Access

Register for free to hear about the latest expert-led education, peer-reviewed articles, conference highlights, and innovative CME activities.

Sign up with an Email

Or use a Social Account.

This Functionality is for

Members Only

Explore the latest in medical education and stay current in your field. Create a free account to track your learning.